Cloudflare's Markdown for Agents Changes the Game — Is Your Site Ready for AI Agents?


Key Takeaways: 5 Reasons AI Agent Optimization Just Became Mandatory

Cloudflare's February 2026 launch of "Markdown for Agents" isn't just a product release — it's a signal that the web is restructuring itself around AI consumption. After reviewing the technical documentation and early adoption data, here are the five things every site owner needs to understand.

- HTML-to-Markdown conversion cuts token usage by ~80%: Cloudflare's own benchmarks show the same page consuming ~16,000 tokens as HTML versus ~3,000 as Markdown. For AI agents processing thousands of pages, that can be the difference between a viable workload and a cost-prohibitive one.

- Content-Signal headers let you declare AI usage policies at the HTTP level: directives such as ai-train=yes, search=yes, ai-input=yes give site owners machine-readable control over how AI systems use their content, a level of granularity robots.txt was never designed for.

- robots.txt alone can't control AI agent behavior: AI agents now negotiate content format via Accept: text/markdown headers, creating a new layer of interaction that sits alongside traditional crawl control.

- AI cloaking is a real and growing threat: researchers have demonstrated that serving different content to Markdown-requesting agents than to human browsers is trivially easy, creating a "shadow web" problem that mirrors early SEO cloaking abuse.

- You don't need Cloudflare to make your site AI-agent friendly: llms.txt adoption, Markdown API endpoints, structured data, and answer-first content design all work independently of any CDN provider.

The rest of this article breaks down each point with technical detail and actionable implementation steps.

1. What Cloudflare's "Markdown for Agents" Actually Does

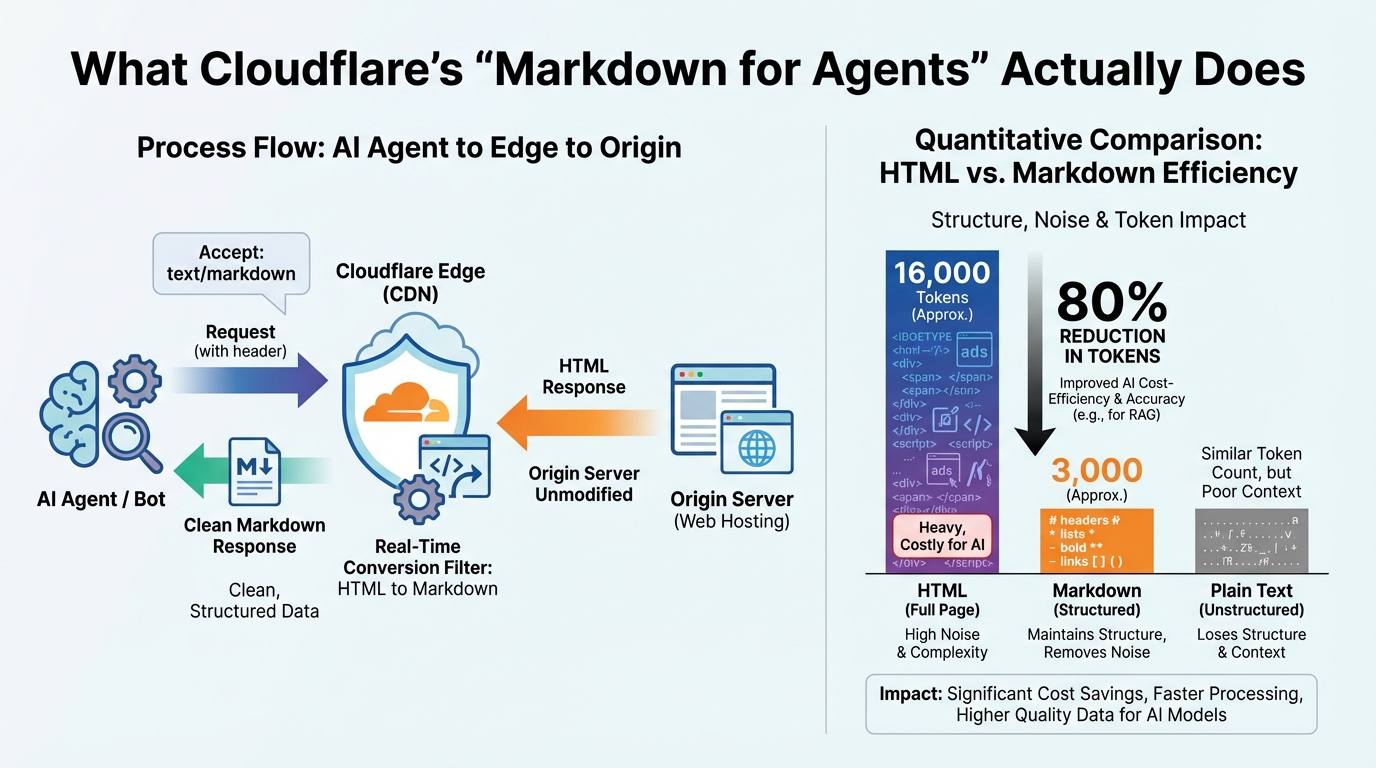

In February 2026, Cloudflare launched a feature that converts HTML pages to Markdown in real time at the CDN edge. Here's how it works in practice.

The Mechanism

When an AI agent sends an HTTP request with the Accept: text/markdown header, Cloudflare's edge network intercepts the response, strips the HTML down to its content structure, and returns clean Markdown. The origin server never knows this happened — it generates HTML exactly as before.
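On the agent side, opting in is just a request header. The sketch below uses only Python's standard library; the helper names and the q-value fallback to HTML are illustrative choices, not something Cloudflare's documentation prescribes. It treats a response as Markdown only when the Content-Type says so, because sites without the feature will keep returning HTML.

```python
import urllib.request


def build_markdown_request(url: str) -> urllib.request.Request:
    """Prefer Markdown, but accept HTML (lower q-value) if the site can't convert."""
    return urllib.request.Request(
        url,
        headers={"Accept": "text/markdown, text/html;q=0.9"},
    )


def is_markdown_response(content_type: str) -> bool:
    # Content-Type may carry parameters, e.g. "text/markdown; charset=utf-8".
    media_type = content_type.split(";", 1)[0].strip().lower()
    return media_type == "text/markdown"


def fetch_page(url: str) -> tuple[bool, str]:
    """Return (is_markdown, body) for one page. Performs a real network request."""
    with urllib.request.urlopen(build_markdown_request(url), timeout=10) as resp:
        content_type = resp.headers.get("Content-Type", "")
        body = resp.read().decode("utf-8", errors="replace")
    return is_markdown_response(content_type), body
```

On a zone with the feature enabled, fetch_page("https://example.com/") should report True; elsewhere you will usually get HTML back and False.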

Why Markdown Matters for AI

Here's the thing: HTML is extraordinarily noisy from an AI agent's perspective. Navigation menus, footers, ad scripts, tracking pixels — none of it is useful for understanding page content. But it all consumes tokens.

Markdown preserves the content structure (headings, lists, tables, links) while eliminating everything else. Cloudflare's benchmarks tell the story clearly.

| Format | Tokens (same page) | Structure preserved | Noise level |
|---|---|---|---|
| HTML | ~16,000 | Yes | Very high |
| Markdown | ~3,000 | Yes | Minimal |
| Plain text | ~2,500 | Lost | Low |

That's an 80% reduction. For RAG systems processing hundreds of pages per query, this changes the economics entirely.
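The arithmetic behind that figure, using the HTML and Markdown rows of the table:

```latex
\text{reduction} = \frac{16{,}000 - 3{,}000}{16{,}000} = \frac{13{,}000}{16{,}000} = 0.8125 \approx 81\%
```

Cloudflare's headline rounds this down to 80%.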

References: Markdown for Agents (Cloudflare Blog); Cloudflare Markdown for Agents (nohackspod); Cloudflare Markdown for Agents (Thunderbit)

2. The Triple Impact of 80% Token Reduction

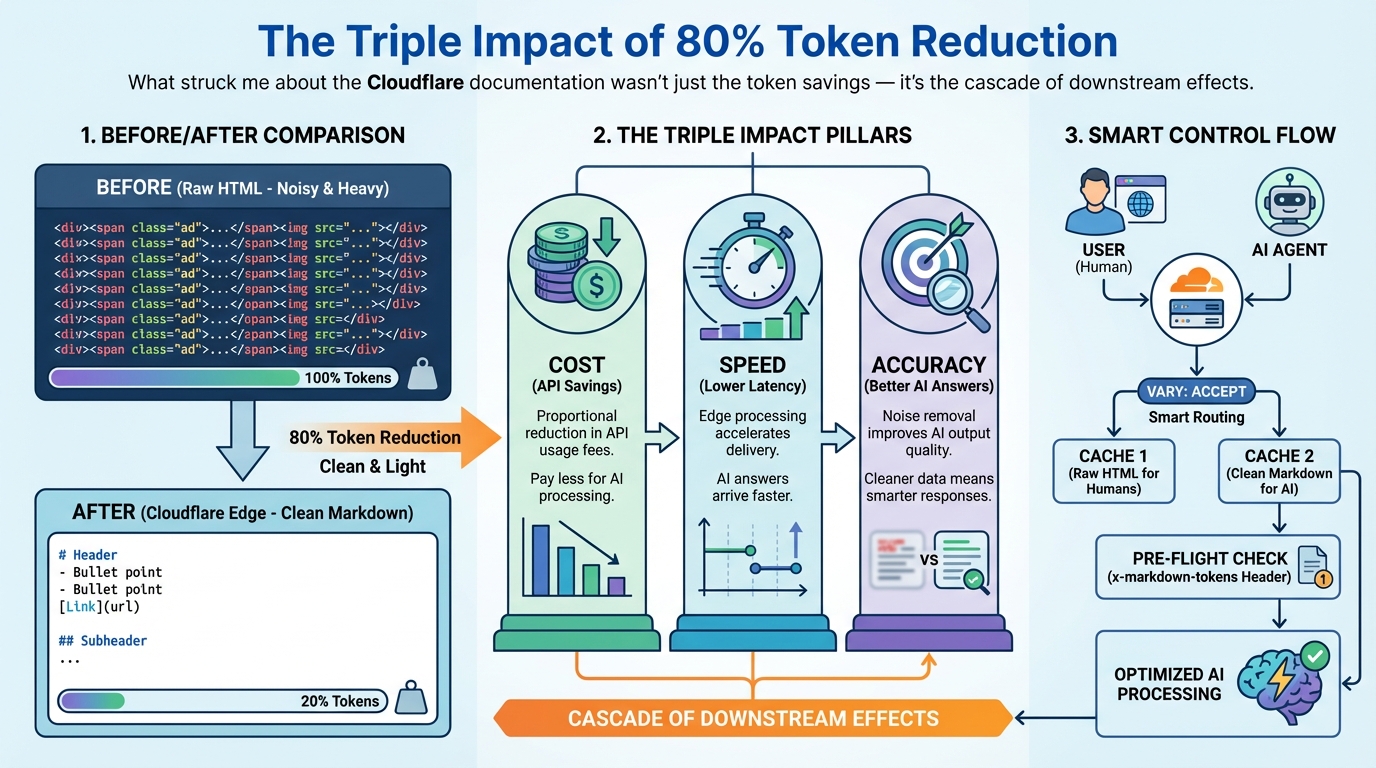

What struck me about the Cloudflare documentation wasn't just the token savings; it was the cascade of downstream effects.

Cost, Speed, and Accuracy

Cost: LLM APIs charge per token, so input cost scales linearly with token count. An 80% reduction in input tokens means roughly 80% lower input cost for every page an agent reads; output-token charges are unaffected. For enterprise RAG systems processing thousands of documents, this translates directly into monthly infrastructure savings.
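A back-of-the-envelope sketch of the input-cost effect. Only the 16,000 and 3,000 token counts come from Cloudflare's benchmark; the per-token price and the monthly page volume are hypothetical placeholders you should replace with your own provider's pricing and traffic.

```python
def monthly_input_cost(pages_per_month: int, tokens_per_page: int,
                       usd_per_million_tokens: float) -> float:
    """Input-token cost only; output tokens are billed separately."""
    return pages_per_month * tokens_per_page * usd_per_million_tokens / 1_000_000


PRICE = 3.00      # hypothetical USD per 1M input tokens; check your provider
PAGES = 100_000   # hypothetical pages read by your agents per month

html_cost = monthly_input_cost(PAGES, 16_000, PRICE)
md_cost = monthly_input_cost(PAGES, 3_000, PRICE)
print(f"HTML: ${html_cost:,.2f}  Markdown: ${md_cost:,.2f}  "
      f"saved: {1 - md_cost / html_cost:.1%}")
```

Because the cost is linear in tokens, the percentage saved does not depend on the price you plug in; only the absolute dollar figures do.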

Latency: Smaller payloads transfer faster over the network. And because the conversion happens at Cloudflare's edge, it adds no extra load on the origin server, so the optimization is fully transparent to your stack.

Accuracy: Less noise means better AI output. When HTML navigation text and footer boilerplate pollute the context window, AI responses degrade. Clean Markdown input produces cleaner, more relevant answers.

The x-markdown-tokens Header

Cloudflare's response includes an x-markdown-tokens header that reports how many tokens the Markdown content contains, before the agent processes it. This is genuinely useful: agents can decide whether a page fits their context window before committing to ingestion.

Previously, an agent had to fetch the full page, tokenize it, and only then decide whether it was too large. Because the count arrives in the response headers, an agent can now check it before reading the body or running its own tokenizer.
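A minimal sketch of that pre-flight decision, assuming the header value is a plain integer. The helper names and the policy of skipping pages that carry no count are hypothetical choices for illustration; a real agent might instead fall back to tokenizing the body itself.

```python
from typing import Mapping, Optional


def markdown_token_count(headers: Mapping[str, str]) -> Optional[int]:
    """Read x-markdown-tokens from response headers; None if absent or malformed."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "x-markdown-tokens":
            try:
                return int(value.strip())
            except ValueError:
                return None
    return None


def should_ingest(headers: Mapping[str, str], remaining_budget: int) -> bool:
    """Decide from the headers alone whether the page fits the remaining token budget."""
    count = markdown_token_count(headers)
    if count is None:
        # Hypothetical policy: with no pre-flight count, skip rather than guess.
        return False
    return count <= remaining_budget
```

With urllib.request.urlopen, you can pass resp.headers to should_ingest before calling resp.read(), so oversized pages are never read into memory or tokenized.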

Cache Separation via Vary: Accept

The Vary: Accept response header tells caches to store HTML and Markdown responses for the same URL as separate entries. Human browsers get HTML and AI agents get Markdown, all handled at the CDN layer with no origin-side logic required.
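A toy model of what Vary: Accept means for a cache, assuming an exact-match comparison of the Accept value. Real CDNs typically normalize the header first, since browser Accept strings vary widely; the function and variable names here are illustrative only.

```python
def cache_key(url: str, request_headers: dict[str, str], vary: list[str]) -> tuple[str, ...]:
    """Cache key = URL plus the value of every request header named in Vary."""
    lowered = {k.lower(): v.strip() for k, v in request_headers.items()}
    return (url,) + tuple(lowered.get(name.lower(), "") for name in vary)


VARY = ["Accept"]  # what a "Vary: Accept" response header declares
cache: dict[tuple[str, ...], str] = {}

browser = {"Accept": "text/html"}
agent = {"Accept": "text/markdown"}

cache[cache_key("/blog/post/", browser, VARY)] = "<html>...</html>"
cache[cache_key("/blog/post/", agent, VARY)] = "# Post title\n..."
# Same URL, two independent entries: the browser and the agent never share a response.
```

Without the Vary header, both requests would map to the key ("/blog/post/",) and whichever format was cached first would be served to everyone.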

References: Markdown for Agents (Cloudflare Developers); Cloudflare Now Converts Web Pages to Markdown (MediaCopilot); Cloudflare Markdown for Agents (Search Engine Land)

3. Content-Signal Headers and the New Privacy Frontier

The technical benefits of Markdown conversion are clear. But this technology introduces new challenges on both the security and privacy fronts.

Content-Signal: A Machine-Readable Usage Policy

Cloudflare introduced the Content-Signal HTTP header, which lets site owners declare three distinct usage permissions.

- ai-train=yes/no: whether AI systems may use the content for model training

- search=yes/no: whether the content can appear in search results

- ai-input=yes/no: whether AI may use the content as real-time input for responses
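A site declares its policy with a single header, for example Content-Signal: ai-train=no, search=yes, ai-input=yes. For agents that want to honor it, here is a minimal parser sketch assuming that comma-separated key=value form. Skipping malformed entries and treating a missing key as "no stated preference" are assumptions of this sketch, not rules taken from Cloudflare's specification.

```python
def parse_content_signal(value: str) -> dict[str, bool]:
    """Parse a Content-Signal value such as "ai-train=no, search=yes, ai-input=yes".

    Keys the site did not mention are simply absent, meaning "no stated preference".
    """
    signals: dict[str, bool] = {}
    for part in value.split(","):
        key, sep, raw = part.strip().partition("=")
        raw = raw.strip().lower()
        # Keep only well-formed yes/no directives; ignore anything else.
        if sep and raw in ("yes", "no"):
            signals[key.strip().lower()] = raw == "yes"
    return signals
```

For example, parse_content_signal(value).get("ai-train") returns False when a site has opted out of training and None when it has not said either way.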

If robots.txt is a "no trespassing" sign for crawlers, Content-Signal is a license agreement for content usage. The distinction matters for GDPR and HIPAA compliance, where training usage and real-time inference usage carry different regulatory implications.

AI Cloaking: The Shadow Web Problem

On the flip side, the Accept: text/markdown header creates a new attack surface. Researchers have demonstrated that it's trivially easy to serve different content to Markdown-requesting agents than to human browsers.

The implications are serious. A product page could show accurate pricing to humans while feeding AI agents manipulated competitor comparisons. A news site could present factual reporting to browsers while injecting disinformation into AI-consumed Markdown. This mirrors the cloaking tactics that Google penalized in the early days of SEO — but the enforcement mechanisms for AI agents are still nascent.

Mitigation: Zero Trust and CI Verification

Cloudflare's AI Security Suite provides zero-trust policy enforcement, real-time audit logging, and prompt injection prevention. For site operators, the practical step is validating that HTML and Markdown outputs match — automated CI checks comparing both versions should become standard practice.
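A rough CI parity check, sketched with only the standard library: extract the words from both versions and compare the sequences with difflib. Word-sequence similarity is a heuristic. It flags large divergences but can miss small yet meaningful edits, such as a single changed price on a long page. The helper names and any alert threshold you pick (for example 0.9) are hypothetical.

```python
import re
from difflib import SequenceMatcher
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


WORD = re.compile(r"[^\W_]+")  # letters/digits only, so Markdown markup (#, *, _, |) drops out


def words_from_html(html: str) -> list[str]:
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()
    return WORD.findall(" ".join(extractor.parts).lower())


def words_from_markdown(md: str) -> list[str]:
    md = re.sub(r"\]\([^)]*\)", "]", md)  # drop link targets, keep link text
    return WORD.findall(md.lower())


def parity_ratio(html: str, md: str) -> float:
    """1.0 = identical word sequences; lower values mean the versions diverge."""
    return SequenceMatcher(None, words_from_html(html), words_from_markdown(md)).ratio()
```

Because Markdown conversion strips navigation and footers, honest pages will often score below 1.0; compare only the main content region, or calibrate the threshold on pages you know are clean.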

References: Cloudflare Markdown for Agents (QueryBurst); Secure & Govern AI Agents (Cloudflare); HackerNews Discussion on AI Cloaking Risks

LinkSurge

linksurge.jp

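An integrated SEO, AIO, and GEO analytics platform offering tools to maximize brand exposure in the generative AI era, including AI Overviews analysis, SEO rank tracking, and GEO citation optimization.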

4. Making Your Site AI-Agent Friendly Without Cloudflare

If you're thinking "we don't use Cloudflare, so this doesn't apply to us" — don't skip this section. What Cloudflare's announcement really signals is that delivering content in AI-optimized formats has tangible value. You can achieve the same outcomes with your own infrastructure.

Strategy 1: Adopt llms.txt

llms.txt is an emerging standard — a text file placed at your site root that gives AI agents a structured overview of your site's content and organization. Think of it as a welcome guide for AI, complementing the access control that robots.txt provides.
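A minimal generator sketch following the format proposed at llmstxt.org: an H1 title, a blockquote summary, then H2 sections containing Markdown link lists. The site name, URLs, section names, and helper name below are placeholders.

```python
def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render llms.txt: H1 name, blockquote summary, then H2 sections of links."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            entry = f"- [{title}]({url})"
            lines.append(f"{entry}: {note}" if note else entry)
        lines.append("")
    return "\n".join(lines)


content = build_llms_txt(
    "Example Co",  # placeholder site name
    "Guides and reference material on AI search optimization.",
    {
        "Guides": [
            ("Complete GEO Guide", "https://example.com/blog/geo-guide.md", "Citation optimization"),
        ],
        "Optional": [
            ("About", "https://example.com/about.md", ""),
        ],
    },
)
# Publish the string as a plain-text file at https://example.com/llms.txt
```

Generating the file from your CMS or sitemap, rather than hand-editing it, keeps it in sync as pages are added.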

Strategy 2: Build a Markdown API Endpoint

Create a server-side endpoint like /api/markdown?url=/blog/your-article/ that returns Markdown versions of your content. If you're using a static site generator, you likely already have Markdown source files — serving them directly is straightforward.
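A minimal standard-library sketch of such an endpoint, assuming your Markdown sources live in a local content/ folder that mirrors your URL paths (so /blog/your-article/ maps to content/blog/your-article.md). The folder layout, function names, and the example Content-Signal policy are hypothetical; in production this logic would more likely live in your existing web framework.

```python
from http.server import BaseHTTPRequestHandler
from pathlib import Path
from typing import Optional
from urllib.parse import parse_qs, urlparse

CONTENT_ROOT = Path("content")  # hypothetical folder of .md sources mirroring URL paths


def resolve_markdown(page_path: str, root: Path = CONTENT_ROOT) -> Optional[Path]:
    """Map '/blog/your-article/' to <root>/blog/your-article.md, refusing path traversal."""
    root = root.resolve()
    slug = page_path.strip("/")
    if not slug:
        return None
    candidate = (root / f"{slug}.md").resolve()
    if not candidate.is_relative_to(root) or not candidate.is_file():
        return None
    return candidate


class MarkdownHandler(BaseHTTPRequestHandler):
    """Serves GET /api/markdown?url=/blog/your-article/ as text/markdown."""

    def do_GET(self) -> None:
        parsed = urlparse(self.path)
        if parsed.path != "/api/markdown":
            self.send_error(404)
            return
        page = parse_qs(parsed.query).get("url", [""])[0]
        source = resolve_markdown(page)
        if source is None:
            self.send_error(404, "No Markdown source for that URL")
            return
        body = source.read_bytes()
        self.send_response(200)
        self.send_header("Content-Type", "text/markdown; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        # Example policy only; choose the directives that match your own terms.
        self.send_header("Content-Signal", "ai-train=no, search=yes, ai-input=yes")
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run HTTPServer(("127.0.0.1", 8000), MarkdownHandler).serve_forever() (HTTPServer also comes from http.server) and request http://127.0.0.1:8000/api/markdown?url=/blog/your-article/. The is_relative_to check needs Python 3.9 or later.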

Strategy 3: Comprehensive Structured Data

JSON-LD implementation (Article, FAQPage, HowTo) remains the most reliable way for AI to understand your content's meaning and structure. Markdown conversion handles formatting; structured data handles semantics. You need both layers.
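A small sketch of generating FAQPage markup with Python's json module. The schema.org types used (FAQPage, Question, Answer) are real; the helper name and sample question are illustrative. Article and HowTo markup follow the same pattern with their own properties.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Render a schema.org FAQPage as a <script type="application/ld+json"> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # "<\/" is a valid JSON escape; it stops answer text from closing the script tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

For example, faq_jsonld([("Can I make my site AI-agent friendly without Cloudflare?", "Yes.")]) produces a tag you can place in the page head.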

Strategy 4: Allow AI Crawlers in robots.txt

Explicitly permit major AI crawlers (GPTBot, PerplexityBot, ClaudeBot) in your robots.txt and keep your sitemap current. This is foundational — without crawler access, no amount of formatting optimization matters.
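A sample robots.txt that explicitly admits the three crawlers named above, checked with Python's built-in urllib.robotparser. The domain, sitemap URL, and /admin/ rule are placeholders, and vendors occasionally add or rename user-agent tokens, so confirm each one against the vendor's documentation before deploying.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("GPTBot", "PerplexityBot", "ClaudeBot"):
    print(agent, parser.can_fetch(agent, "https://example.com/blog/post/"))
```

Running the same check against your live file (RobotFileParser("https://your-site/robots.txt") followed by read()) is a quick way to catch an accidental block.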

For a complete guide to AI crawler configuration and site structure optimization, see "AI Search Rewrote the SEO Playbook — 7 Tactics for 2026."

Strategy 5: Answer-First Content Structure

When AI generates responses, it preferentially references the first 40-60 words of each section. Structuring content with "Atomic Answers" at the start of every section is as effective as — or more effective than — serving Markdown format.
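A rough editorial check for the 40-60 word guideline, assuming sections are marked with "## " Markdown headings and paragraphs are separated by blank lines. The word range is the article's rule of thumb, and the helper names are hypothetical.

```python
import re


def opening_word_counts(markdown: str) -> dict[str, int]:
    """Word count of the first paragraph under each '## ' heading."""
    counts: dict[str, int] = {}
    # With one capture group, re.split yields [preamble, heading1, body1, heading2, body2, ...].
    sections = re.split(r"^## +(.+)$", markdown, flags=re.MULTILINE)
    for heading, body in zip(sections[1::2], sections[2::2]):
        paragraphs = [p for p in re.split(r"\n\s*\n", body.strip()) if p.strip()]
        first = paragraphs[0] if paragraphs else ""
        counts[heading.strip()] = len(first.split())
    return counts


def flag_sections(markdown: str, low: int = 40, high: int = 60) -> list[str]:
    """Headings whose opening paragraph falls outside the low-high word range."""
    return [h for h, n in opening_word_counts(markdown).items() if not low <= n <= high]
```

Running flag_sections over your Markdown sources in CI gives editors a short list of sections whose opening answer is too thin or too long.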

For platform-specific GEO implementation strategies and citation optimization tactics, see "The Complete GEO Guide."

References: How to Serve Markdown to AI Agents (dev.to); Cloudflare The Secret Weapon for Building AI Agents (JustThink AI); Don't Let Your AI Agents Go Rogue (Softprom)

5. Your AI Agent Optimization Roadmap

Now that the priorities are clear, here's a practical execution sequence.

Phase 0: Baseline Assessment (1 Day)

- Run curl -H "Accept: text/markdown" against your own site and see what comes back

- Use LinkSurge's AI Overview analysis to check how your content currently gets cited by AI

- Verify your robots.txt doesn't block AI crawlers

Phase 1: Structured Data Implementation (1-2 Weeks)

- Add FAQPage, Article, and HowTo JSON-LD to high-traffic pages

- Place 40-60 word Atomic Answers at the start of each content section

- Update sitemap.xml to ensure full AI crawler coverage

Phase 2: Machine-Readable Format Delivery (2-4 Weeks)

- Create and deploy an llms.txt file

- Build a Markdown API endpoint (if you have the engineering resources)

- Add Content-Signal equivalent meta tags or HTTP headers

Phase 3: Measurement and Iteration (Ongoing)

- Monitor AI citation rates with LinkSurge's AI Overview analysis

- Track token efficiency improvements

- Update AI crawler permissions as new agents emerge

For a comprehensive strategy covering technical SEO, content, and link building, see the "Complete SEO Guide for 2026."

References: Best Practices SASE for AI (Cloudflare Blog); Markdown for Agents (Cloudflare Developers); Cloudflare Markdown for Agents (Search Engine Land)

Frequently Asked Questions

Which Cloudflare plans support Markdown for Agents?

Pro, Business, and Enterprise plans. Activation is a single toggle in the dashboard under "Bots/AI," or via the API endpoint PATCH /client/v4/zones/{zone_tag}/settings/content_converter. Free plans don't have access.
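A sketch of that API call using only the standard library. The path comes from the answer above, and https://api.cloudflare.com/client/v4 is Cloudflare's standard API base. The {"value": "on"} request body follows the convention of other Cloudflare zone settings and is an assumption here, so verify it against Cloudflare's API reference before use. The zone tag and token are placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def content_converter_request(zone_tag: str, api_token: str,
                              enabled: bool = True) -> urllib.request.Request:
    """Build (but do not send) the PATCH that toggles Markdown for Agents on a zone."""
    # Assumption: like other zone settings, this one accepts {"value": "on" | "off"}.
    body = json.dumps({"value": "on" if enabled else "off"}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/zones/{zone_tag}/settings/content_converter",
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )

# To send it: urllib.request.urlopen(content_converter_request(ZONE_TAG, API_TOKEN))
```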

Can I make my site AI-agent friendly without Cloudflare?

Yes. Deploy an llms.txt file, build a Markdown API endpoint, implement JSON-LD structured data, and allow AI crawlers in robots.txt. These strategies work with any hosting provider or CDN. Cloudflare automates the process, but manual implementation delivers equivalent results.

What's the difference between Content-Signal headers and robots.txt?

robots.txt controls crawler access — whether an agent can visit a page at all. Content-Signal controls content usage — what the agent is permitted to do with the content it retrieves. You might allow access but block training usage, or permit search indexing but deny real-time AI input. They're complementary controls operating at different levels.

What is AI cloaking, and how do I prevent it?

AI cloaking means serving different content to agents requesting Accept: text/markdown than to human browsers. It's the AI-era equivalent of search engine cloaking. Prevention requires automated CI checks comparing HTML and Markdown outputs for content parity, plus zero-trust policies for agent access. Cloudflare's AI Security Suite provides built-in protections against this.

Conclusion: AI Agent Readiness Is the New Technical SEO Baseline

Cloudflare's "Markdown for Agents" marks the moment when the web's infrastructure began formally adapting to AI consumption. Whether you use Cloudflare or not, the underlying shift is clear: sites that make their content easy for AI agents to consume will get cited more often.

Start with three things. Implement structured data, allow AI crawlers in your robots.txt, and restructure your content with answer-first design. These steps work regardless of your CDN or hosting stack.

LinkSurge's AI Overview analysis lets you track how your content gets cited across Google AI Overviews and ChatGPT in real time — a practical starting point for measuring the impact of your AI agent optimization efforts.