The Complete GEO Guide for 2026: How to Get Cited by ChatGPT, Gemini, Perplexity & Claude


Key Takeaways: 5 Priorities for GEO Success in 2026

Over the past six months, I've systematically tested how ChatGPT, Perplexity, and Claude select their citation sources — and the differences between platforms are far more significant than most people realize. Content marketing in 2026 isn't just about ranking in search results — it's about getting cited by AI. Generative Engine Optimization (GEO) is the practice of making your content discoverable and quotable by ChatGPT, Gemini, Perplexity, Claude, and Google AI Overviews. Here are the five priorities that matter most.

- Structured data and prompt-friendly writing are the foundation of AI citation — Implement JSON-LD for FAQPage, Article, and Product schemas, and place a 40-60 word conclusion at the start of every section. This makes your content easy for RAG (Retrieval-Augmented Generation) pipelines to retrieve and cite accurately

- Each AI platform has a different citation mechanism — ChatGPT uses browsing with OAI-SearchBot (the plugin program was discontinued in 2024, replaced by custom GPT Actions), Gemini leverages Knowledge Graph integration, Perplexity auto-generates a Sources list, and Claude uses a document-level Citation API. You need platform-specific optimization for each

- Original research and explicit authority signals drive citation rates — AI models compare multiple sources when generating answers. Unique data, case studies, and clearly attributed author credentials give your content a decisive advantage over commodity information

- Compliance with copyright, privacy, and AI ethics is essential — Copyright still applies at the generation stage, data privacy laws like GDPR govern what can be indexed, and AI governance frameworks require transparency and source attribution

- Citation Rate is your primary KPI, and phased execution is the path to results — Measure how often AI-generated answers include your URL, then follow a 5-phase implementation plan to systematically improve that metric over time

The sections below break down each priority with technical details, platform-specific tactics, and a step-by-step implementation roadmap.

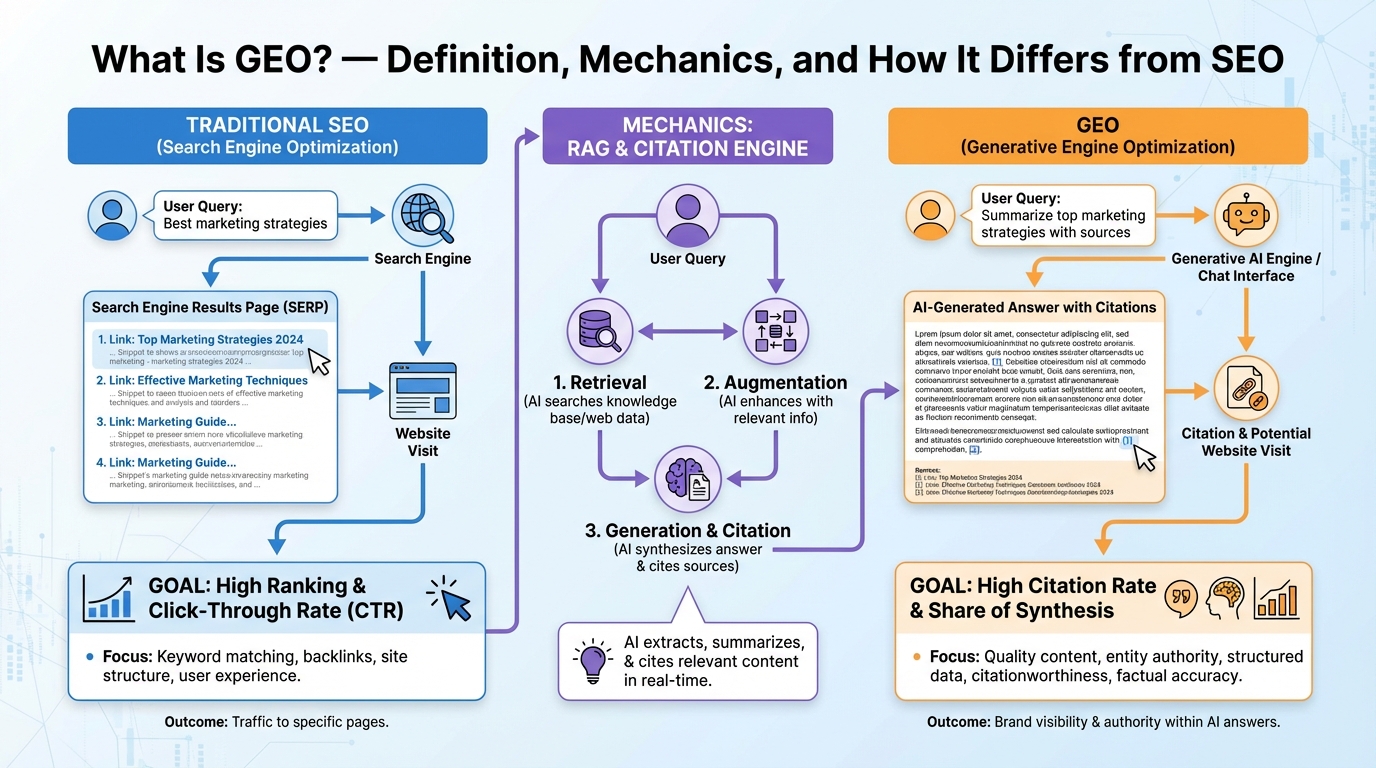

1. What Is GEO? — Definition, Mechanics, and How It Differs from SEO

Defining Generative Engine Optimization

GEO (Generative Engine Optimization) is the practice of structuring your content so that AI-driven search engines — ChatGPT, Google Gemini, Perplexity, Anthropic Claude, and Google AI Overviews — retrieve it, understand it, and cite it when generating answers to user queries.

While SEO focuses on ranking higher in a list of links, GEO focuses on being quoted within AI-generated answers. The goal shifts from click-through optimization to extraction friendliness.

Why GEO matters now

This is something I've seen firsthand in client projects — sites with strong traditional SEO rankings are losing traffic to competitors who optimize specifically for AI citation. Zero-click searches are accelerating rapidly. When AI Overviews appear, organic CTR drops by an estimated 50-60% (Ahrefs, 12/2025; Seer Interactive, 11/2025), and roughly 58.5% of Google searches end without a click to any external site (SparkToro / Datos, 07/2024). Traditional SEO alone can no longer sustain organic traffic. GEO is the strategy that secures your brand's visibility in this new search environment. For a deeper look at how AI Overviews and AI Mode are reshaping organic search, see our guide on how AI search is transforming SEO strategy.

The two technical pillars behind GEO

To understand GEO, you need to know how AI generates answers in the first place.

RAG (Retrieval-Augmented Generation) is the mechanism where an AI model retrieves external information at query time and injects it into the response. Instead of relying solely on training data, the model searches the web, fetches the most relevant content, and combines it into an answer. GEO optimization targets this retrieval step — making your content the one that gets pulled in.

Citation Engine is the mechanism that automatically attaches source URLs or references to AI-generated answers. ChatGPT's inline browsing links, Perplexity's "Sources" tab, Claude's Citation API, and Google AI Overview's source cards all use some form of citation engine. Getting recognized by the citation engine is GEO's ultimate goal.

| Dimension | SEO | GEO |
|---|---|---|
| Optimization target | Search engine ranking algorithms | AI's RAG pipeline + Citation Engine |
| Primary KPIs | Keyword rankings, organic CTR | Citation Rate, Share of Synthesis |
| Content design | Keyword density, backlink profile | Machine-readable structure, Q&A format, schema markup |
| How results appear | Listed on the search results page | Cited within AI-generated answers |

References: Generative Engine Optimization (CrafterCMS); GEO vs Traditional SEO Guide (Strapi); What Is Generative Engine Optimization (Frase)

2. Technical Foundations for AI Citation

With the conceptual framework in place, let's move to the technical implementation. Getting cited by AI requires six technical building blocks. These work together to make your content easy for RAG pipelines to discover, parse, and reference.

Structured data (JSON-LD) implementation

Schema.org markup in JSON-LD format is the most direct way to help AI understand your content's meaning. FAQPage, Article, Product, and HowTo schemas tell retrieval systems exactly what type of information your page contains and how it's organized.

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [{
    "@type": "Question",
    "name": "What is generative engine optimization?",
    "acceptedAnswer": {
      "@type": "Answer",
      "text": "GEO is the practice of optimizing content to be cited by AI search engines like ChatGPT, Gemini, and Perplexity."
    }
  }]
}
</script>
```

For Perplexity in particular, FAQ schema has the highest impact on citation frequency — add it to every Q&A block on your site.
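If your Q&A blocks live in a CMS or in Markdown files, generating the markup is less error-prone than hand-editing it. Here is a minimal Python sketch using only the standard library; the helper name `faq_jsonld` and its input format (a list of question/answer pairs) are assumptions for illustration, not part of any CMS API.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a FAQPage JSON-LD <script> tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps non-English text readable in the page source
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # "<\/" is a legal JSON escape; it stops "</script>" inside an answer from closing the tag
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(faq_jsonld([
        ("What is generative engine optimization?",
         "GEO is the practice of optimizing content to be cited by AI search engines."),
    ]))
```

Each question becomes a `Question` with an `Answer`, which is the nesting schema.org expects for `mainEntity` on a `FAQPage`.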

Answer-first paragraph design

In my direct testing with Perplexity and Claude, articles that lead with a conclusion get cited noticeably more often than those that don't. Place a self-contained answer (40-60 words) at the start of each section. AI models prioritize section openings when scanning for quotable content, so a conclusion-first structure raises your citation probability. Follow the opening answer with supporting data, examples, and source citations.
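To audit existing pages against the 40-60 word guideline, a short script can measure each section's opening paragraph. This is a minimal sketch, assuming your content is Markdown with `##`/`###` headings and that whitespace-separated words are a good enough word count; `check_answer_first` is a hypothetical helper name.

```python
import re


def check_answer_first(markdown: str, low: int = 40, high: int = 60) -> dict[str, int]:
    """Map each H2/H3 heading whose opening paragraph falls outside [low, high] words to its word count."""
    problems = {}
    # With one capture group, re.split yields [preamble, heading1, body1, heading2, body2, ...]
    parts = re.split(r"^#{2,3}\s+(.+)$", markdown, flags=re.MULTILINE)
    for heading, body in zip(parts[1::2], parts[2::2]):
        # Paragraphs are separated by blank lines
        paragraphs = [p for p in re.split(r"\n\s*\n", body.strip()) if p.strip()]
        opening = paragraphs[0] if paragraphs else ""
        count = len(opening.split())
        if not low <= count <= high:
            problems[heading.strip()] = count
    return problems


if __name__ == "__main__":
    sample = "## What is GEO?\nGEO is the practice of structuring content for AI citation.\n"
    print(check_answer_first(sample))
```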

How should you structure headings for AI citation?

Use H2/H3 headings phrased as natural-language questions (e.g., "What is generative engine optimization?" rather than "GEO Overview"). This matches the sub-query extraction pattern that LLMs use when breaking down complex user questions.

Content chunking for RAG

Break your content into blocks of 800 tokens or fewer, each with its own heading and URL anchor. RAG systems achieve higher retrieval accuracy with well-segmented chunks than with long, unbroken text.
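The chunking rule can be sketched in a few lines. This assumes Markdown input with `##`/`###` headings and uses whitespace-separated words as a stand-in for tokens; real tokenizers usually produce more tokens than words, so in practice you would count with your embedding model's own tokenizer or leave headroom below 800. The helper names are hypothetical.

```python
import re


def slugify(text: str) -> str:
    """Turn a heading into a URL anchor, e.g. 'What is GEO?' -> 'what-is-geo'."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def chunk_markdown(markdown: str, max_tokens: int = 800) -> list[dict]:
    """Split Markdown into heading-scoped chunks of at most max_tokens words.

    Assumption: one whitespace-separated word approximates one token.
    """
    chunks = []
    parts = re.split(r"^#{2,3}\s+(.+)$", markdown, flags=re.MULTILINE)
    for heading, body in zip(parts[1::2], parts[2::2]):
        words = body.split()
        anchor = slugify(heading)
        # Long sections become numbered parts that share the heading
        for i, start in enumerate(range(0, max(len(words), 1), max_tokens)):
            chunks.append({
                "heading": heading.strip(),
                "anchor": anchor if i == 0 else f"{anchor}-{i + 1}",
                "text": " ".join(words[start:start + max_tokens]),
            })
    return chunks
```

Splitting on words discards paragraph breaks inside a chunk; a production splitter would usually break on paragraph or sentence boundaries first and fall back to word counts only for oversized paragraphs.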

AI crawler control

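Set explicit permissions in robots.txt for each AI crawler, and note that one vendor may run several: OpenAI, for example, uses GPTBot for model training and OAI-SearchBot for ChatGPT search, while Perplexity crawls with PerplexityBot. Pages you want cited should be clearly allowed; pages you don't want AI crawlers to fetch should be explicitly disallowed.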
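Before deploying a robots.txt change, it's worth checking how the rules resolve for each crawler. This sketch uses Python's built-in `urllib.robotparser`; the policy shown and the example.com URLs are placeholders, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Placeholder policy: allow search/citation crawlers, keep the training crawler out of /drafts/
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: GPTBot
Disallow: /drafts/

User-agent: *
Disallow: /private/
"""


def crawler_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Report whether each user-agent token may fetch url under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["OAI-SearchBot", "PerplexityBot", "GPTBot", "ClaudeBot"]
    for url in ["https://example.com/guide", "https://example.com/drafts/x"]:
        print(url, crawler_access(ROBOTS_TXT, url, agents))
```

One caveat: Python's parser applies rules in file order within a group, while RFC 9309 (which Google follows) uses the most specific match, so results can differ when Allow and Disallow rules overlap.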

Cloudflare's "Markdown for Agents" takes this further by serving AI-optimized Markdown directly at the CDN edge. For implementation details and what this shift means for your GEO strategy, see "Cloudflare's Markdown for Agents and AI-Ready Web Optimization."

Prompt-engineering signals for content creators

Include a short "prompt hint" in your meta description that mirrors a likely user question (e.g., "How does GEO differ from SEO?"), so the page's wording matches the queries that retrieval systems score against. Also use chain-of-thought-style explanations: step-by-step reasoning tends to increase Claude's willingness to cite the page.

| Tactic | Priority | Difficulty | Impact on AI citation |
|---|---|---|---|
| JSON-LD structured data | Highest | Medium | Directly increases citation rate |
| Answer-first paragraphs | Highest | Low | Major lift in citation probability |
| Content chunking | High | Low | Improves RAG retrieval accuracy |
| AI crawler control | High | Low | Ensures target pages are discoverable |
| Prompt-engineering signals | Medium | Low | Guides retrieval scoring |
| Cache control | Medium | Low | Improves crawl efficiency |

References: Structured Data Overview (Google Developers); GEO vs Traditional SEO (Strapi); LLM Schema Optimisation Matters (ZC Marketing); Prompt Engineering Techniques (IBM)

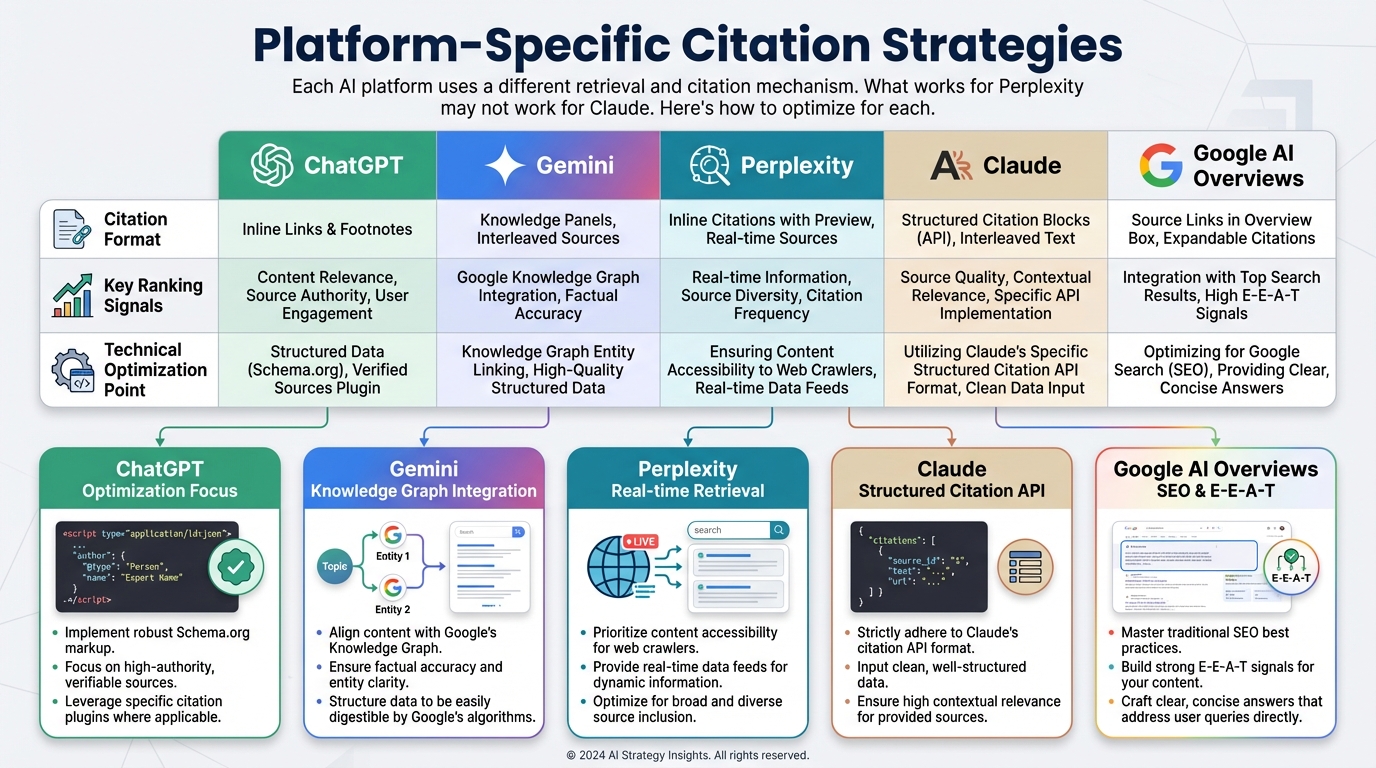

3. Platform-Specific Citation Strategies

This is the part of GEO I find most fascinating. Each AI platform uses a different retrieval and citation mechanism. What works for Perplexity may not work for Claude. Here's how to optimize for each.

ChatGPT (OpenAI)

ChatGPT uses web search in its browsing mode and displays inline links as source citations. Freshness is a strong signal — include a visible "Last Updated" timestamp on every page. Allow the OAI-SearchBot crawler in your robots.txt so ChatGPT search can discover your pages. Note that the original ChatGPT plugin program was discontinued in April 2024; the replacement is custom GPTs with Actions. Ensure your pages are indexable by both Bing and Google, as ChatGPT's search infrastructure draws from multiple sources.
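It makes sense to keep the visible "Last Updated" date in sync with your markup so the two never disagree. A minimal sketch that builds schema.org Article data with datePublished, dateModified, and a Person author carrying sameAs links (the same Person markup also matters for the Gemini and Claude signals discussed below); the helper name, author details, and profile URL are hypothetical placeholders.

```python
import json
from datetime import date


def article_jsonld(headline: str, published: date, modified: date,
                   author_name: str, author_title: str, same_as: list[str]) -> dict:
    """Build schema.org Article data with explicit dates and a Person author."""
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),  # mirror the visible "Last Updated" date
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
            # sameAs links tie the byline to the author's profiles elsewhere
            "sameAs": same_as,
        },
    }


if __name__ == "__main__":
    data = article_jsonld(
        "The Complete GEO Guide for 2026",
        date(2026, 1, 10), date(2026, 2, 3),
        "Jane Doe", "Head of SEO",  # hypothetical author
        ["https://www.linkedin.com/in/example"],  # placeholder profile URL
    )
    print(json.dumps(data, indent=2))
```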

Google Gemini

Gemini breaks complex queries into multiple sub-queries (averaging 6-10 per prompt) and pulls the most authoritative source for each. It heavily leverages Google's Knowledge Graph, so sameAs, Organization, and Person markup directly influence citation. Grounding sources appear in the API response at groundingMetadata.groundingChunks[].web.uri — not a sourceUrl field. Focus on three areas: information density, multimodal integration (images, videos with schema), and real-time signals through weekly data updates.
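If you call the Gemini API with Google Search grounding yourself, checking whether your domain was used means walking that field. A minimal sketch, assuming the REST response shape (camelCase keys; the Python SDK exposes the same data as `grounding_metadata.grounding_chunks`). The URIs may be Google redirect links rather than your page URL, with the source domain in `web.title`, so the sketch checks both fields; the function names are hypothetical.

```python
from urllib.parse import urlparse


def grounding_sources(response: dict) -> list[dict]:
    """Extract uri/title pairs from a Gemini REST generateContent response."""
    sources = []
    for candidate in response.get("candidates", []):
        metadata = candidate.get("groundingMetadata", {})
        for chunk in metadata.get("groundingChunks", []):
            web = chunk.get("web")
            if web:  # skip any chunk that is not a web source
                sources.append({"uri": web.get("uri", ""), "title": web.get("title", "")})
    return sources


def cites_domain(response: dict, domain: str) -> bool:
    """True if any grounding source points at domain, via URI host or title."""
    domain = domain.lower()
    for src in grounding_sources(response):
        host = urlparse(src["uri"]).hostname or ""
        title = src["title"].lower()
        for candidate in (host, title):
            if candidate == domain or candidate.endswith("." + domain):
                return True
    return False
```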

Perplexity

Perplexity auto-generates a clickable "Sources" list beneath every answer. Its Sonar retrieval engine favors fresh, well-cited, FAQ-style content. Publish explicit question-answer pairs — the model extracts these directly. Maintain weekly content refreshes, as recency is Perplexity's top ranking factor. Use canonical URLs and avoid JavaScript-only rendering because PerplexityBot cannot execute JS.

Claude (Anthropic)

Claude's Citation API returns structured citation blocks that reference specific passages within documents provided in the request (using character or page locations), rather than generating footnotes with external URLs. Citations are enabled per-document by setting citations: {"enabled": true} on each document content block — not via the system prompt. One key limitation: Citations cannot be combined with Structured Outputs — the API returns a 400 error if both are enabled. Claude places greater weight on explicit author expertise — detailed author bios with credentials, organizational authority, and logical reasoning chains (if-then, cause-effect structures) are critical.
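The per-document switch is easiest to see in a request body. This is a minimal sketch of the Messages API payload shape described in Anthropic's Citations documentation, plus a parser for the `citations` arrays on returned text blocks. `MODEL_ID` is a placeholder, and no network call is made here; send the payload with the official SDK or an HTTP client of your choice.

```python
import json

MODEL_ID = "claude-model-id"  # placeholder: substitute a current model name


def build_request(doc_text: str, doc_title: str, question: str) -> dict:
    """Messages API body with citations enabled on one plain-text document block."""
    return {
        "model": MODEL_ID,
        "max_tokens": 1024,
        "messages": [{
            "role": "user",
            "content": [
                {
                    "type": "document",
                    "source": {"type": "text", "media_type": "text/plain", "data": doc_text},
                    "title": doc_title,
                    "citations": {"enabled": True},  # set per document, not in the system prompt
                },
                {"type": "text", "text": question},
            ],
        }],
    }


def extract_citations(response: dict) -> list[tuple[str, str]]:
    """Return (document_title, cited_text) for every citation in a response."""
    found = []
    for block in response.get("content", []):
        if block.get("type") != "text":
            continue
        for cite in block.get("citations") or []:
            found.append((cite.get("document_title", ""), cite.get("cited_text", "")))
    return found


if __name__ == "__main__":
    print(json.dumps(build_request("GEO targets AI citation.", "GEO primer", "What does GEO target?"), indent=2))
```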

Google AI Overviews

Keep answer blocks to two or three sentences and place them immediately after matching headings. Use FAQ and HowTo schema to map directly to the Overview's answer extraction pipeline. Audit your nosnippet, data-nosnippet, and max-snippet directives: Google applies them to AI Overviews, so overly restrictive settings can stop AI from pulling excerpts from your pages.
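A quick way to catch restrictive directives is to scan each page's HTML. This minimal sketch uses the standard library's `HTMLParser` to read robots/googlebot meta tags and count `data-nosnippet` elements; the 50-character warning threshold is an arbitrary assumption, and it does not check `X-Robots-Tag` HTTP headers.

```python
from html.parser import HTMLParser


class SnippetDirectiveAudit(HTMLParser):
    """Collect robots/googlebot meta directives and data-nosnippet elements."""

    def __init__(self):
        super().__init__()
        self.directives: list[str] = []
        self.nosnippet_elements = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # HTMLParser already lowercases tag and attribute names
        if "data-nosnippet" in attrs:
            self.nosnippet_elements += 1
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def snippet_warnings(html: str, min_chars: int = 50) -> list[str]:
    audit = SnippetDirectiveAudit()
    audit.feed(html)
    audit.close()
    warnings = []
    for d in audit.directives:
        if d == "nosnippet":
            warnings.append("nosnippet blocks all text excerpts")
        elif d.startswith("max-snippet:"):
            value = d.split(":", 1)[1].strip()
            # -1 means "no limit"; 0 up to min_chars allows little or no excerpt
            if value.lstrip("-").isdigit() and 0 <= int(value) < min_chars:
                warnings.append(f"max-snippet:{value} allows very short excerpts")
    if audit.nosnippet_elements:
        warnings.append(f"{audit.nosnippet_elements} element(s) marked data-nosnippet")
    return warnings
```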

| Platform | Citation format | Top signal | Integration point |
|---|---|---|---|
| ChatGPT | Inline browsing links | Freshness + OAI-SearchBot crawlability | robots.txt + Bing/Google indexing |
| Gemini | groundingChunks[].web.uri + Knowledge Graph | JSON-LD author / datePublished | Google Cloud Console |
| Perplexity | Clickable Sources list | Recency + FAQ format | robots.txt PerplexityBot allow |
| Claude | Structured citation blocks | Author credentials + reasoning chains | Anthropic Console |
| AI Overviews | Source cards | FAQ/HowTo schema + concise answers | Google Search Console |

References: Overview of OpenAI crawlers (OpenAI Platform); Grounding with Google Search (Gemini API); Citations (Anthropic API Docs); Perplexity SEO (Metronyx); AI Features (Google Developers)

4. Content Strategies That Get Cited by AI

What kind of writing gets cited?

Having analyzed the writing style of frequently-cited pages across multiple AI engines, I've identified a consistent pattern. The most cited content is conversational yet fact-dense. Use short sentences, active voice, and embed statistics every 150-200 words. Avoid filler and hedge language. AI models compare multiple sources and favor the one that delivers the clearest, most data-rich answer.

Target high-intent, answerable questions

Focus on queries that generate sub-queries — for example, "best project management tool for remote teams" rather than just "project management tools." Create pillar content covering the full topic (2,500-3,000 words) to satisfy ChatGPT's preference for comprehensive resources.

Why does original research matter for GEO?

Include original research, proprietary case studies, or unique datasets. Perplexity and Claude heavily weight primary sources that can't be found elsewhere. A financial research blog that added author bios with 15-year industry credentials and embedded primary-source links saw Claude citations jump from under 1% to 8% of relevant queries.

Citation practice that AI models recognize

Cite primary, authoritative sources inline — hyperlinked immediately after the claim. Use DOI or URL format and avoid footnotes that are hidden from the model. Prefer government, academic, or industry-standard publications. Claude explicitly looks for "credible, verifiable content."

Don't forget multimodal assets

Add infographics, videos with full transcripts, and ImageObject schema. Gemini shows a 3.7x higher citation rate for pages with rich media. Alt text and structured metadata for visual content are no longer optional — they're a competitive advantage.

| Aspect | Best practice |
|---|---|
| Writing style | Conversational, fact-dense, short sentences, active voice |
| Topic selection | High-intent answerable questions, pillar content (2,500-3,000 words) |
| Depth and originality | Original research, case studies, proprietary data |
| Citation practice | Inline hyperlinks immediately after claims, prefer authoritative sources |
| Multimodal content | Infographics + video transcripts + ImageObject schema |

References: Generative Engine Optimization (LLM Refs); Claude AI Citation Optimization Guide (TrySight); Optimizing Content for LLMs (Page Optimizer Pro); Perplexity Search Visibility Tips (Wellows)

LinkSurge

linksurge.jp

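An integrated SEO, AIO, and GEO analytics platform. It provides analysis tools for maximizing brand exposure in the generative AI era, including AI Overviews analysis, SEO rank tracking, and GEO citation optimization.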

5. Compliance, Ethics, and Legal Considerations

GEO doesn't operate in a legal vacuum. As you optimize content for AI citation, you need to navigate copyright law, data privacy regulations, and AI ethics frameworks.

Copyright and AI-generated content

Copyright applies at the generation stage. If AI output is substantially similar to a copyrighted work and the model relied on that work, infringement claims can arise. Use only content you own or have permission to republish. Cite sources properly and avoid uploading full copyrighted materials for AI citation without rights.

Data privacy and GDPR compliance

Don't expose personal or confidential data in public pages; AI crawlers will index it. Follow GDPR and regional privacy laws for any content that could contain personally identifiable information. If you're building custom RAG pipelines, anonymize or pseudonymize (for example, hash) all PII before vectorization, keeping in mind that GDPR still treats pseudonymized data as personal data.
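In a custom RAG pipeline, the redaction step can sit right before chunks are embedded. This is a deliberately simple sketch: the single regular expression only catches common email and phone formats (names, addresses, and ID numbers need a dedicated PII detector), it may over-redact dates and other long number runs, and the key handling is an assumption. Keyed hashing here is pseudonymization, not anonymization.

```python
import hashlib
import hmac
import re

# Common email formats, or phone-like runs of 9+ digits/separators.
# Email is tried first in the alternation, so an address is replaced as a whole.
PII_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+|\+?\d[\d\s().-]{7,}\d")


def pseudonymize(text: str, key: bytes) -> str:
    """Replace matched emails/phone numbers with stable keyed-hash tokens.

    HMAC tokens are pseudonyms: anyone holding the key can recompute the token
    for a known value, so GDPR still treats the output as personal data.
    """
    def token(match: re.Match) -> str:
        digest = hmac.new(key, match.group(0).encode("utf-8"), hashlib.sha256).hexdigest()
        return f"[PII:{digest[:12]}]"

    # A single sub() pass means replacement tokens are never rescanned
    return PII_PATTERN.sub(token, text)
```

Stable tokens keep references to the same person linkable across chunks without storing the raw value in the vector index.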

Attribution and transparency

Disclose any AI-assisted drafting in accordance with publisher policies. Major publishers (ACM, Elsevier, and others) now require AI-assistance notes. This transparency builds trust with both human readers and AI models that evaluate source credibility.

Ethical sourcing of information

Prefer peer-reviewed, government, or industry-standard data. AI models down-rank content from biased or low-credibility sites, and an unverifiable statement gives a model nothing to ground its answer in, so fact-check every claim and include the source URL next to each statistic.

| Requirement | How to meet it |
|---|---|
| Copyright | Use owned content or licensed material; cite sources properly |
| Data privacy | Follow GDPR; anonymize PII before vectorization |
| Attribution | Disclose AI-assisted drafting per publisher policies |
| Misinformation | Fact-check all claims; include source URLs inline |
| Ethical sourcing | Prefer peer-reviewed, government, or industry-standard data |

References: AI Best Practices (FIT NYC); Ethical Use of AI (PRSA); AI in Academic Research (Purdue Libraries)

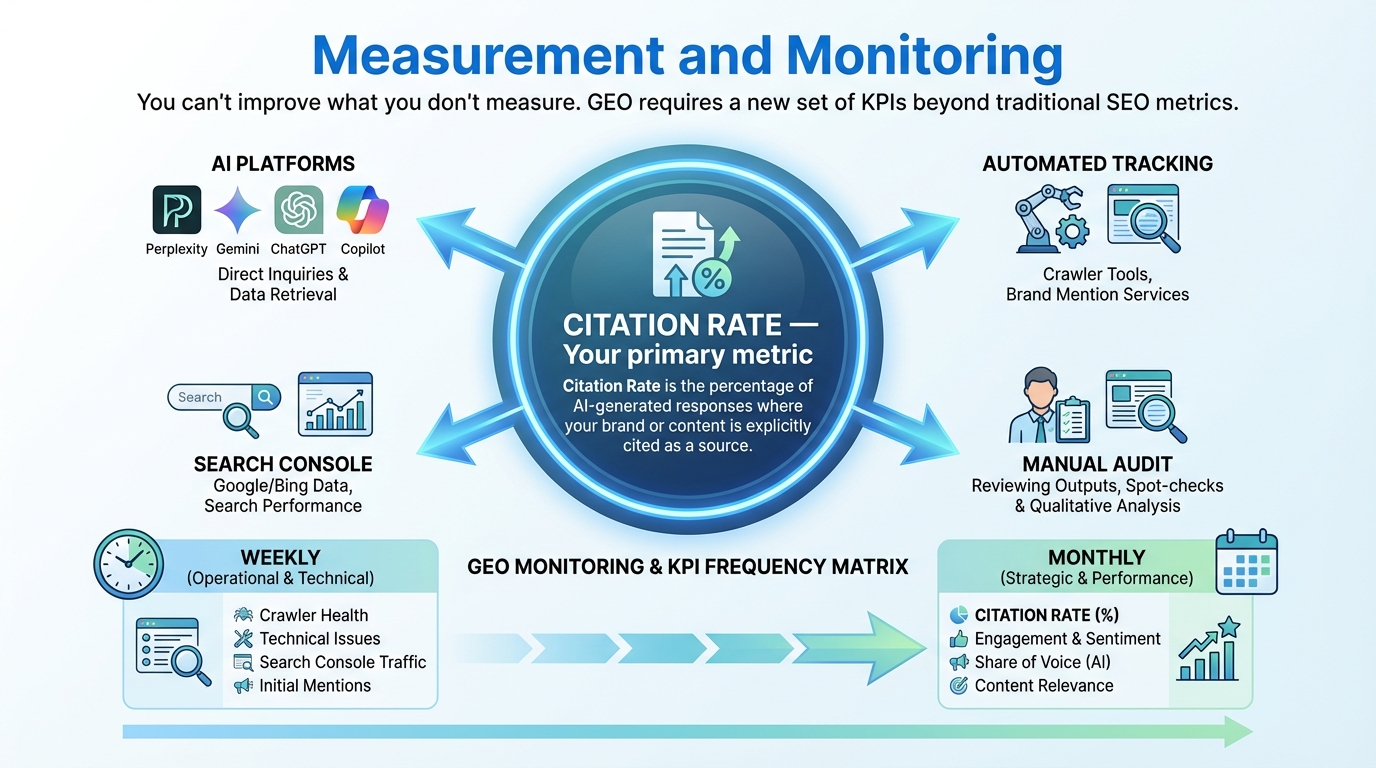

6. Measurement and Monitoring

Implementing GEO tactics is only half the equation — without a measurement framework, you have no way to know what's working. GEO requires a new set of KPIs beyond traditional SEO metrics.

Citation Rate — Your primary metric

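Citation Rate is the percentage of AI-generated answers that include your URL. Track it across Perplexity's Sources list, the web sources Claude cites in its answers, Gemini's groundingChunks[].web.uri, and ChatGPT's inline links. (Claude's Citations API only cites documents you pass in the request, so it won't show whether Claude cites your site.) This is the single most important GEO metric to monitor monthly.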
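The metric itself is simple arithmetic: answers that cite your domain divided by answers audited, per platform. A minimal Python sketch, assuming you have already collected each answer's cited URLs in order (from manual audits or a tracking tool's export); the `AuditResult` record and the rule that subdomains count as yours are assumptions. It also computes the Top-3 Source Position rate listed in the metrics table.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class AuditResult:
    platform: str  # e.g. "perplexity", "gemini", "chatgpt", "claude"
    prompt: str
    cited_urls: list[str] = field(default_factory=list)  # in the order the answer lists them


def _is_ours(url: str, domain: str) -> bool:
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def citation_metrics(results: list[AuditResult], domain: str) -> dict[str, dict[str, float]]:
    """Per-platform Citation Rate and Top-3 Source Position rate for one domain."""
    domain = domain.lower()
    totals = defaultdict(lambda: {"answers": 0, "cited": 0, "top3": 0})
    for r in results:
        t = totals[r.platform]
        t["answers"] += 1
        positions = [i for i, url in enumerate(r.cited_urls) if _is_ours(url, domain)]
        if positions:
            t["cited"] += 1
            if positions[0] < 3:  # first citation of ours is among the first three sources
                t["top3"] += 1
    return {
        platform: {
            "citation_rate": t["cited"] / t["answers"],
            "top3_rate": t["top3"] / t["answers"],
        }
        for platform, t in totals.items()
    }
```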

AI visibility tracking tools

Platforms like LinkSurge, OtterlyAI, KIME, and Rankscale automatically query major LLMs and record brand mentions, citation position, and sentiment. These tools save hours of manual prompt auditing and provide trend data over time.

Search Console integration

Google Search Console counts clicks and impressions from AI features such as AI Overviews in its standard Performance report (Web search type), so you can see which queries and pages earn visibility there. This connects your GEO efforts directly to Google's AI search data.

Custom prompt audits

Run a set of high-intent prompts (e.g., "What is GEO?" or "best SEO tools for 2026") in each AI tool weekly and log the returned sources. This manual audit catches gaps that automated tools may miss.
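Logging each weekly run pays off when you compare runs. A minimal sketch, assuming you store each audit as a mapping from a prompt label (for example "perplexity: what is geo") to the set of cited domains; the function names are hypothetical.

```python
from urllib.parse import urlparse


def to_domains(urls: list[str]) -> set[str]:
    """Reduce cited URLs to hostnames, dropping a leading 'www.'."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname or ""  # .hostname is already lowercased
        if host.startswith("www."):
            host = host[4:]
        if host:
            hosts.add(host)
    return hosts


def audit_diff(last_week: dict[str, set[str]],
               this_week: dict[str, set[str]]) -> dict[str, dict[str, set[str]]]:
    """Per prompt, report domains that dropped out of or newly appeared in the cited sources."""
    report = {}
    for prompt in sorted(last_week.keys() | this_week.keys()):
        before = last_week.get(prompt, set())
        after = this_week.get(prompt, set())
        if before != after:
            report[prompt] = {"lost": before - after, "gained": after - before}
    return report
```

A prompt where your domain shows up under "lost" is the first place to look for a stale page or a competitor's new content.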

| Metric | How to measure | Frequency |
|---|---|---|
| Citation Rate | AI answer URL tracking across platforms | Monthly |
| Top-3 Source Position | Whether your page appears in the first 3 citations | Monthly |
| Referral Traffic from AI | Referrer and UTM data for clicks from AI-generated answers | Weekly |
| AI Crawler Access | Server log analysis for GPTBot, PerplexityBot, ClaudeBot | Weekly |
| Engagement from AI visitors | Time on page, bounce rate for AI-referred sessions | Monthly |
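Both log-based rows in the table can be pulled from standard web server access logs. A minimal sketch, assuming Apache/Nginx "combined" log format; the crawler tokens and assistant hostnames are an illustrative list to extend, and counting a `utm_source` value such as chatgpt.com as an AI referral assumes the assistant tags its outbound links that way (ChatGPT is known to do this for many links, but don't assume it for every platform). User agents can be spoofed, so verify crawler hits against the IP ranges that some vendors, OpenAI among them, publish.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlparse

# Tail of a "combined" log line: "request" status bytes "referer" "user-agent"
LOG_TAIL = re.compile(r'"(?P<request>[^"]*)" \d{3} \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$')

# User-agent tokens to count (illustrative; extend as vendors add crawlers)
AI_CRAWLERS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot")
# Referrer hosts / utm_source values treated as AI assistants (assumed list)
AI_SOURCES = {"chatgpt.com": "ChatGPT", "perplexity.ai": "Perplexity",
              "gemini.google.com": "Gemini", "claude.ai": "Claude"}


def _ai_source(host: str):
    host = host.lower()
    for domain, name in AI_SOURCES.items():
        if host == domain or host.endswith("." + domain):
            return name
    return None


def summarize(lines):
    """Count AI crawler hits and AI-referred visits in combined-format log lines."""
    crawlers, referrals = Counter(), Counter()
    for line in lines:
        m = LOG_TAIL.search(line.rstrip("\n"))
        if not m:
            continue  # skip lines in other formats
        agent = m["agent"].lower()
        bot = next((b for b in AI_CRAWLERS if b.lower() in agent), None)
        if bot:
            crawlers[bot] += 1
            continue  # crawler traffic is not a referral
        parts = m["request"].split()
        target = parts[1] if len(parts) > 1 else ""
        utm = parse_qs(urlparse(target).query).get("utm_source", [""])[0]
        source = _ai_source(urlparse(m["referer"]).hostname or "") or _ai_source(utm)
        if source:
            referrals[source] += 1
    return crawlers, referrals
```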

References: AI Visibility Tracking (OtterlyAI); Best AI Visibility Tools (SE Ranking); AI SEO Tracking Tools 2026 (Search Influence)

7. Real-World Case Studies

These examples show how different types of organizations have used GEO tactics to increase AI citations.

SaaS productivity suite — Gemini citations up 3.7x

A B2B SaaS company rewrote its pillar article to 2,800 words, added high-density data tables, ImageObject schema, and weekly KPI updates. Gemini citations increased 3.7x, and AI-generated click-through rose 15%.

Healthcare guidelines site — 42% traffic growth from Perplexity

A healthcare information site built a comprehensive FAQ with inline citations, refreshed content monthly, and implemented full Article schema. The site achieved 5 citations per Perplexity answer on average, and referral traffic from Perplexity grew 42% in three months.

Financial research blog — Claude citations from <1% to 8%

A financial research blog added author bios highlighting 15 years of industry experience, structured content with logical reasoning chains, and embedded primary-source links directly after each claim. Claude cited the blog in 8% of relevant queries, up from under 1% before optimization.

E-commerce product pages — Featured in 12 AI Overview queries

An e-commerce retailer integrated HowTo schema for product selection guides, concise answer blocks, and Product schema with GTIN identifiers. The pages were featured in AI Overviews for 12 product-related queries, capturing 38% of SERP clicks.

| Case | Platform | Key tactics | Result |
|---|---|---|---|
| SaaS productivity suite | Gemini | Pillar content + data tables + ImageObject schema | 3.7x citation increase |
| Healthcare guidelines | Perplexity | FAQ + inline citations + monthly refresh | 42% traffic growth |
| Financial research blog | Claude | Author bios + reasoning chains + primary sources | Citations from <1% to 8% |
| E-commerce product pages | AI Overviews | HowTo schema + answer blocks + Product schema | Featured in 12 queries |

References: Visibility in Gemini (Appear on AI); Perplexity Search Visibility (Wellows); Claude AI Citation Optimization (TrySight); AI Overviews Ranking Guide (Gryffin)

8. Phased Implementation Plan

GEO isn't a one-time project — it's a systematic rollout. This 5-phase plan takes you from quick wins to a fully operational AI visibility strategy.

Phase 0: Baseline Analysis (Week 1)

- Build a list of target keywords and high-intent questions for your domain

- Audit competitor AI citations by searching your category in Perplexity, Claude, and ChatGPT — tools like LinkSurge can track AI Overview appearances across keywords

- Measure your current Citation Rate to establish a baseline

Phase 1: Technical Foundation (Weeks 2-3)

- Implement JSON-LD for FAQPage and Article on your highest-traffic pages

- Set canonical URLs and configure robots.txt to allow AI crawlers

- Achieve Core Web Vitals targets (LCP < 2.5s, INP < 200ms, CLS < 0.1)

- Add "Last Updated" timestamps visible on every page

Phase 2: Content Optimization (Weeks 4-6)

- Rewrite the first 200 words of key pages to include a direct answer and inline citation

- Create FAQ pages for each core topic with question-based H2s and FAQ schema

- Add multimodal assets (infographics, short videos) tagged with ImageObject and VideoObject schema

- Chunk long-form content into 800-token blocks with unique headings and URL anchors

Phase 3: Platform Integration (Weeks 7-10)

- Allow OAI-SearchBot and PerplexityBot in robots.txt

- Validate schema with Google's Rich Results Test

- Implement Person schema (referenced from each Article's author property) with detailed credentials for all bylines

- Set up weekly content refresh calendar for time-sensitive pages

Phase 4: Measurement and Continuous Improvement (Ongoing)

- Deploy an AI visibility dashboard (LinkSurge, OtterlyAI, or similar) to monitor citation trends

- Run weekly custom prompt audits across all major AI platforms

- Build entity homepages (brand, product, expert) interlinked with breadcrumb and sameAs properties

- Publish original research on third-party domains (industry journals, reputable news sites) to create a citation network

For a comprehensive view of technical SEO, content strategy, and link building that forms the foundation for GEO, see our complete SEO guide for 2026.

References: Generative Engine Optimization (LLM Refs); Generative Search Optimization (Quickchat AI); AI Overviews (Google Developers)

Frequently Asked Questions

What is the difference between GEO and SEO?

SEO targets search engine ranking algorithms, optimizing for keyword positions and organic click-through rates. GEO targets the retrieval and citation mechanisms of generative AI, optimizing for Citation Rate and Share of Synthesis. The most effective strategy layers GEO on top of a solid SEO foundation — they're complementary, not competing approaches.

What is the minimum you need to do to start GEO?

Start with two things: implement JSON-LD for FAQPage and Article schemas, and rewrite section openings to include a 40-60 word direct answer. These two changes alone can meaningfully increase your AI citation rate. Add robots.txt permissions for AI crawlers at the same time for maximum impact.

How do you get cited by Perplexity?

Allow PerplexityBot in your robots.txt, structure content in explicit question-answer pairs, and refresh it weekly. Perplexity's Sonar model prioritizes recency and FAQ-formatted content. Strong E-E-A-T signals — particularly visible author credentials and publication dates — also increase your citation probability.

Does GEO work for small businesses, not just enterprises?

Yes. Many GEO tactics — FAQ schema, answer-first paragraphs, robots.txt configuration — require no budget, just deliberate content structuring. A small web agency that implemented structured data and local information optimization was cited in AI Overviews regardless of its search ranking position. Scale matters less than structure.

How do you measure GEO success?

Track Citation Rate (the percentage of AI answers that cite your URL) as your primary KPI. Use tools like OtterlyAI or Rankscale for automated tracking, supplement with weekly manual prompt audits, and monitor AI crawler access in your server logs. Google Search Console's Performance report, which includes traffic from AI Overviews, connects your data directly to Google's AI search pipeline.

Conclusion: Start with Schema and Answer-First Content

GEO extends traditional SEO by targeting how AI finds and cites your content. Get the structured data right, lead every section with a direct answer, keep your content fresh, and build credible authority signals. Each platform rewards slightly different signals — but those fundamentals apply across the board.

The fastest path to results is Phase 0 (audit competitor AI citations) and Phase 1 (JSON-LD implementation plus robots.txt configuration). These steps take 1-2 weeks and deliver measurable improvements in Citation Rate. From there, layer on content optimization, platform integration, and continuous measurement to stay ahead as AI search continues to evolve.

Brand mentions beyond your own site are a critical — and often overlooked — driver of GEO success. For the data behind this shift and a step-by-step playbook, see "91% of AI Answers Come from Sites That Aren't Yours."

LinkSurge's AI Overview analysis lets you track your brand's citation status across Google AI Overviews and ChatGPT in real time. It's a practical starting point for measuring and improving your GEO performance.